data transfer rate (DTR)

What is a data transfer rate (DTR)?

The data transfer rate (DTR) is the amount of digital data that's moved from one place to another in a given time. The data transfer rate can be viewed as the speed of travel of a given amount of data from one place to another. In general, the greater the bandwidth of a given path, the higher the data transfer rate.

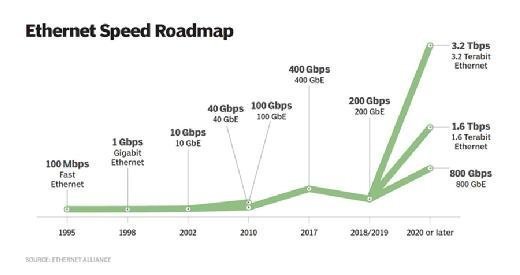

The DTR is sometimes also referred to as throughput. In telecommunications, data transfer is usually measured in bits per second. For example, a typical low-speed connection to the internet may be 33.6 kilobits per second. On Gigabit Ethernet local area networks, data transfer can be as fast as 1,000 megabits per second (Mbps). Newer network switches can transfer data in the terabit range, such as the Silicon One G100 switch from Cisco, which offers a DTR of up to 25.6 terabits per second (Tbps). In earlier telecommunications systems, data transfer was measured in characters or blocks of a certain size per second.

The data transfer rate varies with the type of medium used, such as fiber optic cable, twisted pair or USB. For example, USB 3.0 and USB 3.1 have data transfer rates of 5 gigabits per second (Gbps) and 10 Gbps, respectively.

In computers, data transfer is often measured in bytes per second. The world record for the highest data transfer rate was set by Japan's National Institute of Information and Communications Technology in 2021 when it delivered a long-distance transmission of data at speeds of 319 Tbps over 1,864 miles.
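Because network rates are usually quoted in bits per second and computer rates in bytes per second, it can help to convert between the two. The following is a minimal sketch of that conversion, assuming 8 bits per byte and decimal (power-of-10) prefixes; the function names are illustrative only.

```python
BITS_PER_BYTE = 8


def mbps_to_megabytes_per_second(megabits_per_second: float) -> float:
    """Convert megabits per second (Mbps) to megabytes per second (MB/s)."""
    return megabits_per_second / BITS_PER_BYTE


def megabytes_per_second_to_mbps(megabytes_per_second: float) -> float:
    """Convert megabytes per second (MB/s) to megabits per second (Mbps)."""
    return megabytes_per_second * BITS_PER_BYTE


# Gigabit Ethernet is quoted at 1,000 Mbps, which is 125 MB/s.
print(mbps_to_megabytes_per_second(1000))  # 125.0
```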

How to calculate a data transfer rate

Calculating a data transfer rate is straightforward. DTR can be expressed in different units depending on the situation, but typically, the following formula is used to calculate it:

DTR = D / T

Here, DTR is the data transfer rate, D is the total amount of digital data transferred and T is the total time it takes to transfer it.

For example, to find the data transfer rate of a 100 megabit (Mb) file that's transferred in two minutes, perform the following steps:

- Convert the time into seconds. Since the transfer takes two minutes to complete, the total time in seconds is 2 x 60 or 120 seconds.

- Determine the DTR. By using the above-mentioned formula, the DTR can be calculated as follows:

DTR = D / T or DTR = 100 Mb / 120 seconds, where 100 Mb is the size of the given file. Therefore, the DTR of the file transfer is approximately 0.83 Mbps.
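The same calculation can be sketched in a few lines of Python. This is an illustrative example only: it assumes the file size is 100 megabits, and the function name `data_transfer_rate` is made up for this sketch.

```python
def data_transfer_rate(data_amount: float, seconds: float) -> float:
    """Return DTR = D / T, in units of `data_amount` per second."""
    if seconds <= 0:
        raise ValueError("transfer time must be positive")
    return data_amount / seconds


# Step 1: convert the transfer time from minutes to seconds.
minutes = 2
seconds = minutes * 60  # 120 seconds

# Step 2: apply DTR = D / T with D = 100 megabits.
rate_mbps = data_transfer_rate(100, seconds)
print(f"{rate_mbps:.2f} Mbps")  # 0.83 Mbps
```

The result is in whatever unit the data amount uses per second, so passing megabytes instead of megabits would give megabytes per second.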

How to test a data transfer rate

There are a variety of online tools and speed tests that users can employ to test the speed of their internet connection. Most users test internet speeds to confirm that their internet service provider is offering the promised speed.

The speed tests typically work by measuring the download and upload speeds, as well as the latency of the connection. For example, Speedtest by Ookla measures a device's internet connection speed and latency against several geographically dispersed servers.
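The basic idea behind a download test, timing how long it takes to read a known amount of data, can be sketched as follows. This is not how any particular speed test service is implemented. It is a simplified illustration that reads from an in-memory buffer as a stand-in for a network response, and the function name `measure_throughput_mbps` is hypothetical.

```python
import io
import time


def measure_throughput_mbps(stream, chunk_size=64 * 1024):
    """Read `stream` to the end and return (total_bytes, megabits_per_second)."""
    total_bytes = 0
    start = time.perf_counter()
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:  # an empty read means the stream is exhausted
            break
        total_bytes += len(chunk)
    # Guard against a zero elapsed time on very fast in-memory reads.
    elapsed = max(time.perf_counter() - start, 1e-9)
    megabits = total_bytes * 8 / 1_000_000
    return total_bytes, megabits / elapsed


# Stand-in for a download: 5 MB of in-memory data, not a real network transfer.
payload = io.BytesIO(b"\0" * 5_000_000)
total, mbps = measure_throughput_mbps(payload)
print(f"read {total} bytes at about {mbps:.0f} Mbps")
```

The function accepts any file-like object with a `read` method, so in a real measurement the buffer would be replaced by a response read over the network. The reported figure for the in-memory buffer reflects memory speed, not connection speed.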

What is the importance of data transfer rates?

Data transfer speed matters significantly in modern business networking, which requires the transmission of large amounts of data. Service providers also need to offer adequate data transfer rates so customers can access products and online services without delays. Data transfer rates also play an integral role in downloading or streaming complex applications. A good download speed is generally considered to be about 100 Mbps, whereas a good upload speed is at least 10 Mbps. A speed of 100 Mbps is sufficient for streaming videos, attending Zoom meetings or playing games on multiple devices on the same network.

What affects data transfer rates?

Many factors can affect the speed of a data transfer or the quality of an internet connection. To avoid a dip in data transfer rates, an organization should consider the following factors:

- Network congestion. This occurs when the traffic passing through a network reaches its capacity and causes a drop in the quality of service (QoS). The drop in QoS can come in the form of packet loss, queuing delays or the blocking of new connections. For the end user, network congestion often results in slow data transfers or a sluggish internet connection. Depending on the level of delay generated by the network congestion, users might also experience session timeouts and higher round-trip times during ping tests.

- Network latency. This is the time that a data packet takes to travel from the source to the destination. The latency depends on many factors, such as the number of devices or hops to be traversed, the performance of the network devices, such as routers and switches, and the actual physical distance between the source and the destination. The latency also depends on the transfer protocols being used for the data transfer. For example, User Datagram Protocol (UDP) adds little protocol-level delay, as it's connectionless and doesn't wait for acknowledgments, whereas Transmission Control Protocol (TCP) can add to latency, as it's connection-oriented and requires a handshake and acknowledgment of receipt.

- Inadequate hardware resources. A client or a server that doesn't have sufficient hardware resources, such as processing power, hard drive input/output (I/O) and RAM, can reduce the data transfer rate for the entire network. A system running low on resources can slow down both user queries and data transfers that rely on standard TCP processes.

- Filtering and encryption. A network filter, such as a firewall, can affect the data transfer rates of a network, depending on the processing time it requires to inspect traffic, for example, to scan it for viruses. Encrypting and decrypting traffic can add similar processing overhead. Excessive processing time can cause packet loss and retransmission of data and can also slow down the rate of data transfer between source and destination.

- Traffic prioritization. This is also known as class of service, as network traffic is classified into certain categories, including high, medium and low priority. Critical and time-sensitive applications, such as voice over Internet Protocol and video conferencing, typically get a high priority and are guaranteed a certain amount of uplink bandwidth at all times, ahead of other applications on the same network path. If network congestion occurs, the low-priority traffic starts to experience packet loss, disconnections or low data transfer rates, depending on the severity of the congestion.

- Load balancing. This networking technique distributes traffic across a pool of servers. Sometimes, the load balancers that perform this distribution are misconfigured, which can result in packet loss, slower data transmission rates or retransmission of packets.

- Transmission medium. The DTR differs from one medium to another. For example, the DTR for an optical fiber cable is different from that of a twisted-pair cable. The DTR also differs between USB versions: USB 1.0 has a data transfer rate of 12 Mbps, while USB 2.0 has a rate of 480 Mbps.
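To see how much the transmission medium matters, the nominal rates quoted in this article can be turned into ideal transfer times. The following sketch assumes a hypothetical 1,500 MB file and ignores protocol overhead, congestion and every other factor listed above, so real transfers would take longer.

```python
# Nominal maximum rates quoted in this article, in megabits per second.
NOMINAL_RATES_MBPS = {
    "USB 1.0": 12,
    "USB 2.0": 480,
    "Gigabit Ethernet": 1_000,
    "USB 3.0": 5_000,
    "USB 3.1": 10_000,
}


def transfer_time_seconds(size_megabytes: float, rate_mbps: float) -> float:
    """Ideal time to move `size_megabytes` at `rate_mbps`, ignoring overhead."""
    size_megabits = size_megabytes * 8  # 8 bits per byte
    return size_megabits / rate_mbps


FILE_SIZE_MB = 1_500  # hypothetical file size chosen for illustration

for medium, rate in NOMINAL_RATES_MBPS.items():
    print(f"{medium}: {transfer_time_seconds(FILE_SIZE_MB, rate):.1f} s")
```

Under these assumptions, the same file takes 1,000 seconds over USB 1.0, 25 seconds over USB 2.0 and 12 seconds over Gigabit Ethernet.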

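In the world of telecommunications, the terms bandwidth and DTR are often used interchangeably but have distinct meanings. Bandwidth is the maximum capacity of a path, whereas DTR is the rate at which data actually moves across it. To ensure fast and reliable delivery of data, an organization should calculate the bandwidth requirements of its network.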